RESEARCH

Below are some of the research directions that our group is currently pursuing. If you'd like to discuss or collaborate on these, don't hesitate to get in touch.

Creating complex software is difficult, and so people have started using machine learning (ML) to generate software instead of writing it manually. The problem: ML-produced software is opaque to humans, and it is difficult to certify that it always behaves correctly. In safety-critical systems, i.e. autonomous vehicles, this raises serious concerns. As part of the Reluplex/Marabou project, we seek to develop techniques and tools that will allow us to formally reason about deep neural networks (a specific kind of ML-produced software), and in particular to verify that they are correct.

Research on neural network verification is still at an early phase, and there are many challenges to overcome. First, there is the matter of scalability: the verification problem is NP-complete, i.e. is exponentially difficult in the size of the network, and so it is challenging to apply verification to large networks. Our research group is addressing this difficulty by applying state-of-the-art techniques from the fields of verification, linear programming and SMT solving.

Another interesting topic is that of applications: verification of neural networks is a useful hammer, and we are just beginning to discover all the potential nails. One project where we've successfully applied this technique is the ACAS Xu system: an airborne collision-avoidance systems for drones, currently being developed by the US Federal Aviation Administration (FAA). The ACAS Xu system includes 45 deep neural networks, and it is very important that these networks behave correctly as to avoid potential mid-air collisions. Using verification, we were able to formally prove that this is indeed the case (for a list of desired properties), and in one case even detected a bug. Another interesting application is robustness to adversarial examples, where we use verification to prove that small perturbations to a network's inputs do not cause it to make mistakes. Additional examples include network simplification and watermark removal.

For a high-level introduction to our work in this field, check out this press release. You can also have a look at this paper (along with code mentioned therein) or watch this tutorial (part 1, part 2) that I gave at the 2018 Oxford Summer School on Foundations of Programming and Software Systems. The Marabou project, which encapsulates much of the group's ongoing research, is freely available online. (The earlier Reluplex project is no longer maintained.)

Neural Network Verification (Reluplex & Marabou)

Scenario-Based Programming

(Wise Computing)

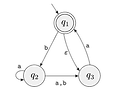

Software is usually written in high-level languages, such as C++ or Python, that afford many convenient programming constructs. Still, the resulting software is often erroneous or fails to meet its goals. One of the reasons for the difficulty of programmers to "get it right" is that programming is still carried out in a way that is not straightforward to humans, and is not aligned with how they think. The Scenario-Based Programming (SBP) paradigm aims to help programmers by letting them program using scenarios, which are building-blocks that are better aligned with how they mentally perceive software. Variants of SBP have appeared in several programming languages, including Live Sequence Charts and Behavioral Programming.

Apart from making things easier for programmers, SBP tends to produce software that has a simple and well-defined structure. This, in turn, makes SBP software more amenable to formal methods and analysis tools. Specifically, tasks such as compositional verificaiton, program repair, property extraction and distribution across nodes, which are very difficult in general, are sometimes significantly simpler for SBP software. These capabilities can help mitigate the still-significant difficulties of producing correct software.

Wise Computing is a broad, long-term project that seeks to take these tools and capabilities to the next level, by making the computer an equal member of the system-development team - continuously involved and making proactive contributions. In a nutshell, the goal is to create an encompassing tool suite that will accompany system design, development and maintenance from A to Z, leading to significantly more robust and adaptive software.

This ambitious project is still at an early stage, and our group is currently working on producing a basic tool-suite and applying it to small and medium examples, as a proof-of-concept. For more details, see this paper.